In the world of GPUs, the year 2022 will be a milestone in its history. Intel has fulfilled its promise to re-enter the market for discrete graphics cards, Nvidia has pushed the size and price of graphics cards to astronomical levels, and AMD has introduced CPU technology into the field of graphics cards. News headlines are filled with stories of disappointing performance, melting cables, and forged frames.

The frenzy of GPUs has flooded into forums, and PC enthusiasts are equally surprised and shocked by the development of the graphics card market. Therefore, it is easy to forget that the chips used in the latest products are the most complex and powerful home computer chips ever.

Next, let's delve into the architecture of all suppliers, peel off the layers, and see what is new, what they have in common, and what this means for ordinary users.

Advertisement

The article will mainly analyze the following aspects:

Overall GPU architecture

Shader core structure

Ray tracing units and functions

Memory hierarchy (cache and DRAM)

Chip packaging and process nodes

Display and media engineWhat's Next for GPUs?

An Overview of the Overall GPU Architecture: Starting from the Top

Let's begin with a significant aspect of this article—a performance comparison is not the focus. Instead, we will look at how everything inside a GPU is arranged, understanding the methodological differences between AMD, Intel, and Nvidia in designing graphics processors by examining statistics and numbers.

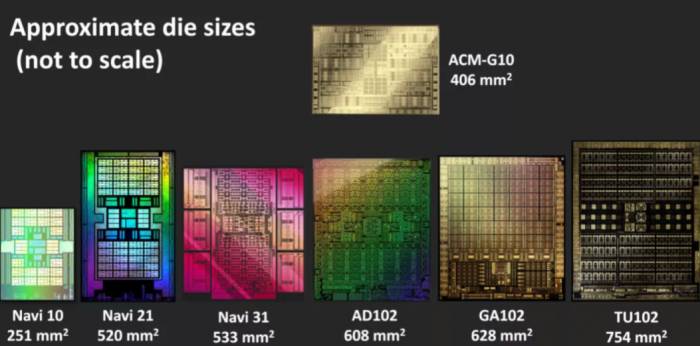

We will start by examining the overall GPU composition of the largest chips available using the architectures we are studying. It should be emphasized that Intel's product targets a different market from AMD or Nvidia, as it is largely a mid-range graphics processor.

These three chips differ significantly in size not only from each other but also from similar chips using previous architectures. All this analysis is purely for understanding what lies beneath these three processors. Before breaking down the basic components of each GPU, we will check the overall structure—shader cores, ray tracing capabilities, memory hierarchy, and display and media engines.

AMD Navi 31

In alphabetical order, the first is AMD's Navi 31, which is the largest RDNA 3-driven chip they have announced to date. Compared to Navi 21, we can see a noticeable increase in the number of components of their previous high-end GPU.

The Shader Engine (SE) contains fewer Compute Units (CUs), reduced from 200 to 16, but now there are a total of 6 SEs—two more than before. This means Navi 31 has up to 96 CUs, deploying a total of 6144 Stream Processors (SPs). AMD has fully upgraded the SPs for RDNA 3, and we will discuss this issue later in the article.Translate the following passage into English: Each shading engine also includes a unit specifically handling rasterization, a primitive engine for triangle setup, 32 render output units (ROP), and two 256kB L1 caches. The last one, now twice the size, but the ROP itself remains unchanged.

AMD has not made many changes to the rasterizer and primitive engine either—said 50% improvement is for the entire chip, as its SE is 50% more than the Navi 21 chip. However, the way SE processes instructions has changed, such as processing multiple drawing commands faster and better managing pipeline stages, which should reduce the waiting time for CU to continue performing another task.

The most obvious change is the one that received the most rumors and gossip before the release in November—GPU packaging in the form of a Chiplet. With years of experience in this field, AMD's choice to do so is logical, but it is entirely for cost/manufacturing reasons, not performance.

We will study this in more detail later in this article, so for now, let's just focus on which parts are where. In Navi 31, the memory controller and its related final layer cache partition are located in an independent small chip (called MCD or memory cache chip) around the main processor (GCD, graphics computing chip).

Due to the need to provide more SEs, AMD has also increased the number of MCs by 50%, so the total bus width of the GDDR6 global memory is now 384 bits. This time, the total amount of Infinity Cache is less (96MB vs. 128MB), but the larger memory bandwidth offsets this.

Intel ACM-G10

Next is Intel and the ACM-G10 chip (formerly known as DG2-512). Although this is not the largest graphics processor produced by Intel, it is the largest consumer graphics processor by Intel.

The block diagram of the ACM-G10 chip is a fairly standard arrangement, which looks more like Nvidia than AMD. It has a total of 8 rendering slices, each containing 4 x cores, totaling 512 vector engines (the Intel ACM-G10 chip is equivalent to AMD's stream processors and Nvidia's CUDA cores).Each rendering slice also includes a basic unit, rasterizer, depth buffer processor, 32 texture units, and 16 ROPs. At first glance, this GPU appears to be quite large, as 256 TMUs and 128 ROPs are more than those found in the Radeon RX 6800 or GeForce RTX 2080.

However, AMD's RNDA 3 chip contains 96 compute units, each with 128 ALUs, while the ACM-G10 has a total of 32 Xe cores, each with 128 ALUs. Therefore, in terms of the number of ALUs alone, Intel's Alchemist GPU is one-third of AMD's. But as we will see later, a large number of ACM-G10's chips are handed over to different digital computing units.

Compared with Intel's first Alchemist GPU released through OEM suppliers, this chip has all the characteristics of a mature architecture in terms of the number of components and structural arrangement.

NVIDIA AD102

We conclude our introduction to different layouts with NVIDIA's AD102, which is their first GPU to use the Ada Lovelace architecture. It doesn't seem to be much different from its predecessor, the Ampere GA102, except that it is much larger. Anyway, it is indeed the case.

NVIDIA uses a component hierarchy of graphics processing clusters (GPUs), which includes 6 texture processing clusters (TPCs), each containing 2 streaming multiprocessors (SMs). This arrangement has not changed with Ada, but the total number definitely has...

In the complete AD102 chip, the number of GPCs has increased from 7 to 12, so there are now a total of 144 SMs, with a total of 18,432 CUDA cores. Compared to the 6,144 SPs in Navi 31, this seems to be an exorbitantly high number, but AMD and Nvidia calculate their components differently.Despite this greatly simplifying the issue, an Nvidia SM is equivalent to an AMD CU—both containing 128 ALUs. Thus, Navi 31 is twice that of Intel's ACM-G10 (counting only ALUs), while AD102 is 3.5 times.

This is why it is unfair to make any direct performance comparisons between them when the scale of the chips is so obviously different. However, once they are incorporated into graphics cards, pricing, and sales, the situation is completely different.

What we can compare, however, is the smallest repeating part of the three processors.

Shader Cores: The Brain of the GPU

From an overview of the entire processor, let's now delve into the core of the chip to look at the basic numerical computation part of the processor: the shader core.

These three manufacturers use different terms and phrases when describing their chips, especially when talking about their overview diagrams. So in this article, we will use our own images, using common colors and structures, making it easier to see what is the same and what is different.

AMD RDNA 3

The smallest unified structure in the GPU's shading part of AMD is called a Dual Compute Unit (DCU). In some documents, it is still referred to as a Workgroup Processor (WGP), while other documents call it a Compute Unit Pair.

Please note that if certain elements are not shown in these diagrams (such as constant caches, double-precision units), this does not mean they do not exist in the architecture.In many aspects, the overall layout and structural elements do not differ significantly from RDNA 2. Two compute units share some cache and memory, with each compute unit containing two sets of 32 stream processors (SPs).

The new feature of the 3rd edition is that each SP now contains twice as many arithmetic logic units (ALUs) as before. Now each compute unit has two sets of SIMD64 units, with each set having two data ports—one for floating-point, integer, and matrix operations, and the other solely for floating-point and matrix operations.

AMD indeed uses separate SPs for different data formats—compute units in RDNA 3 support operations using FP16, BF16, FP32, FP64, INT4, INT8, INT16, and INT32 values.

Another significant new feature is the emergence of what AMD calls AI Matrix Accelerators.

Unlike the architectures of Intel and Nvidia that we will soon see, they do not act as separate units—all matrix operations use SIMD units, and any such computation (called Wave Matrix Multiply Accumulate, WMMA) will utilize the entire set of 64 ALUs.

At the time of writing this article, the exact nature of the AI accelerators is unclear, but it may simply be the circuitry related to processing instructions and the large amount of data involved to ensure maximum throughput. In their Hopper architecture, it may have similar functionality to Nvidia's Tensor Memory Accelerator.

The changes are relatively minor compared to RDNA 2—the old architecture could also handle 64-thread wavefronts (also known as Wave64), but these were dispatched in two cycles and used two SIMD32 blocks in each compute unit. Now, all of this can be done in one cycle and with just one SIMD block.

In previous documentation, AMD stated that Wave32 is typically used for compute and vertex shaders (and possibly ray shaders), while Wave 64 is mainly used for pixel shaders, with the driver compiling shaders accordingly. Thus, the shift to single-cycle Wave64 instruction issues will provide a boost for games that heavily rely on pixel shaders.However, all this additional power needs to be properly harnessed to make full use of it. This is the case for all GPU architectures, and to do so, they all require a large thread load (which also helps to hide the inherent latency associated with DRAM).

Thus, with the doubling of ALUs, AMD has driven the demand for programmers to use as much instruction-level parallelism as possible. This is nothing new in the graphics field, but a significant advantage of RDNA over AMD's old GCN architecture is that it does not require as many threads to be fully utilized. Considering the complexity of modern rendering in games, developers will have more work to do when writing shader code.

Intel Alchemist

Now let's turn to Intel and take a look at the DCU equivalent in the Alchemist architecture, called the Xe Core (which we will abbreviate as XEC). At first glance, these look absolutely huge compared to AMD's structure.

A single DCU in RDNA 3 contains 4 SIMD64 blocks, while Intel's XEC contains 16 SIMD8 units, each managed by its own thread scheduler and scheduling system. Like AMD's stream processors, the so-called vector engines in "Alchemist" can handle integer and floating-point data formats. Although it does not support FP64, this is not a big problem in games.

Intel has always used relatively narrow SIMD—-in products like Gen11, the SIMD used is only 4 wide (i.e., processing 4 threads at the same time), and in the 12th generation, the width only doubled (for example, used in their Rocket Lake CPUs).

But considering that the gaming industry has been accustomed to using SIMD32 GPUs for many years, games have also been encoded accordingly, so the decision to retain narrow execution blocks seems counterproductive.

AMD's RDNA 3 and Nvidia's Ada Lovelace processing blocks can issue 64 or 32 threads in one cycle, while Intel's architecture requires 4 cycles to achieve the same result on a VE—-so each XEC has 16 SIMD units.

However, this means that if the game is not encoded in a way that ensures the VE is fully occupied, the SIMD and related resources (cache, bandwidth, etc.) will be idle. A common theme in Intel Arc series graphics card benchmarking results is that they tend to perform better in games at higher resolutions and/or with many complex modern shader routines.This part is due to the high degree of unit subdivision and resource sharing. The micro-benchmark analysis of the Chips and Cheese website shows that despite a large number of ALUs, the architecture still struggles to achieve proper utilization.

Let's take a look at other aspects of XEC. It is currently unclear how large the 0-level instruction cache is, but AMD's is a 4-way instruction cache (because it serves 4 SIMD blocks), and Intel's must be a 16-way instruction cache, which adds complexity to the cache system.

Intel also chose to provide dedicated matrix operation units for the processor, one unit per vector engine. Having so many units means that a significant part of the die is dedicated to processing matrix math.

AMD uses the SIMD units of the DCU to achieve this, while Nvidia has four relatively large tensor/matrix units per SM. Intel's approach seems a bit excessive because they have a separate architecture called Xe-HP for computing applications.

Another strange design seems to be the load/store (LD/ST) units in the processing block. Not shown in our diagram, they manage memory instructions from threads and move data between the register file and L1 cache. Ada Lovelace, like Ampere, has four per SM partition, totaling 16. RDNA 3 also has dedicated LD/ST circuits as part of the texture unit, just like its predecessor, in each CU.

Intel's Xe-HPG presentation shows that there is only one LD/ST per XEC, but in reality, it may consist of more independent units internally. However, in the optimization guide of OneAPI, there is a diagram that suggests the LD/ST registers a register file per cycle. If this is the case, then Alchemist will always struggle to achieve maximum cache bandwidth efficiency because not all files are served at the same time.

Nvidia Ada Lovelace

The last processing block to focus on is Nvidia's streaming multiprocessor (SM) - the GeForce version of DCU/XEC. This structure has not changed much compared to the Turing architecture of 2018. In fact, it is almost identical to Ampere.

Some units have been adjusted to improve their performance or feature set, but in most cases, there is not much new to talk about. In fact, there may be, but it is well known that Nvidia is reluctant to reveal too much about the internal operations and specifications of its chips. Intel provides more details, but this information is usually hidden in other documents.However, to summarize the structure, the SM is divided into four partitions. Each processor has its own L0 instruction cache, thread scheduler, and scheduling unit, as well as a 64 kB register file section paired with a SIMD32 processor.

Just as in AMD's RDNA 3, the SM supports dual-issue instructions, with each partition being able to process two threads concurrently, one using FP32 instructions and the other using either FP32 or INT32 instructions.

Nvidia's Tensor Cores are now in their fourth revision, but this time, the only notable change is the inclusion of the FP8 Transformer Engine from their Hopper chip—the original throughput data remains unchanged.

The addition of low-precision floating-point formats means that the GPU should be better suited for AI training models. Tensor Cores still provide the sparsity features of Ampere, which can offer up to twice the throughput.

Another improvement lies in the Optical Flow Accelerator (OFA) engine (not shown in our chart). This circuit generates optical flow fields, which are used as part of the DLSS algorithm. In Ampere, the OFA's performance is twice that of the OFA, with the additional throughput being used for their latest version of the temporal anti-aliasing upscaling DLSS 3.

DLSS 3 has faced quite a bit of criticism, mainly around two aspects: the frames generated by DLSS are not "real," and the process adds extra rendering chain latency. The first approach is not entirely invalid, as the system first allows the GPU to render two consecutive frames, storing them in memory, and then uses a neural network algorithm to determine what the intermediate frame looks like.

Then, the current chain returns to the first rendered frame and displays that frame, followed by the DLSS frame, and then the second rendered frame. Since the game engine has not yet looped for the intermediate frame, the screen refreshes without any potential input. Because two consecutive frames need to be paused instead of being presented, any input that has already been polled for those frames will also be paused.Will DLSS 3 become popular or commonplace remains to be seen.

Although Ada's SMs are very similar to Ampere, there are significant changes in the RT cores, which we will address soon. For now, let's summarize the computational capabilities of the repetitive structures of GPUs from AMD, Intel, and Nvidia.

Block Processing Comparison

For standard data formats, we can compare the capabilities of SMs, XECs, and DCUs by looking at the number of operations per clock cycle. Please note that these are peak data and may not be achievable in reality.

Nvidia's data has not changed after Ampere, while RDNA 3's data has doubled in some areas. The fact that "Alchemist" operates on another level for matrix operations should be emphasized again, as these are peak theoretical values.

Considering that Intel's graphics division heavily relies on data centers and computing, just like Nvidia, it is not surprising to see the architecture dedicating so much die space to matrix operations. The lack of FP64 functionality is not an issue, as this data format has not been used in games, and the feature is present in their Xe-HP architecture.

In terms of matrix/tensor operations, Ada Lovelace and Alchemist are theoretically stronger than RDNA 3, but since we are focusing on GPUs mainly used for gaming workloads, these dedicated units primarily provide acceleration for algorithms involving DLSS and XeSS—algorithms that use convolutional autoencoder neural networks (CAENN) to scan images for artifacts and correct them.

AMD's temporal upscaling (FidelityFX Super Resolution, FSR) does not use CAENN, as it is primarily based on the Lanczos resampling method, followed by some image correction routines processed by DCUs. However, in the RDNA 3 release, the next version of FSR was briefly introduced, citing a new feature called Fluid Motion Frames. With FSR 2.0's performance improvements up to twice as much, the general consensus is that this may involve frame generation, similar to DLSS 3, but whether it involves any matrix operations is unclear.

Now everyone can perform ray tracing

With the launch of their Arc graphics card series using the Alchemist architecture, Intel joined AMD and Nvidia in providing dedicated accelerators for various algorithms using ray tracing in graphics. Both Ada and RDNA 3 include significantly updated RT units, so it is necessary to see what is new and different.Starting with AMD, the most significant change in their ray accelerator is the addition of hardware to improve the traversal of the bounding volume hierarchy (BVH). In the 3D world, these data structures are used to accelerate the determination of the surface hit by a ray.

In RDNA 2, all of this work is processed through the compute units, and to some extent, it still is. However, for DXR (Microsoft's ray tracing API), there is hardware support for ray flag management.

Using these can greatly reduce the number of times the BVH needs to be traversed, reducing cache bandwidth and the overall load on the compute units. Essentially, AMD has been focusing on improving the overall efficiency of the system they introduced in their previous architecture.

Additionally, the hardware has been updated to improve box sorting (making traversal faster) and culling algorithms (skipping testing of empty boxes). Coupled with improvements to the cache system, AMD claims that the ray tracing performance has increased by 80% at the same clock speed compared to RDNA 2.

However, in games that use ray tracing, such improvements do not translate into an 80% increase in frames per second - performance in these situations is affected by many factors, and the functionality of the RT units is just one of them.

Since Intel is a newcomer to ray tracing games, there are no improvements. Instead, we are simply told that their RT units handle the BVH traversal and intersection calculations between rays and triangles. This makes them more similar to Nvidia's system than AMD's, but there is not much available information about them.

But we know that each RT unit has an unspecified size cache for storing BVH data and a separate unit for analyzing and sorting ray shader threads to improve SIMD utilization.Each XEC is paired with an RT unit, with a total of four rendering slices per unit. Early tests of the A770 with ray tracing enabled in the game suggest that, regardless of the structure adopted by Intel, Alchemist's overall capability in ray tracing is at least as good as the Ampere chip, and slightly better than the RDNA 2 model.

However, let us reiterate, ray tracing also puts a heavy pressure on the shading cores, cache system, and memory bandwidth, so it is not possible to extract the performance of the RT unit from such benchmark tests.

For the Ada Lovelace architecture, Nvidia has made many changes and has made quite a demand for performance improvement compared to Ampere. The accelerator used for ray-triangle intersection calculations is said to have twice the throughput, and the BVH traversal for non-opaque surfaces is now said to be twice as fast. The latter is important for objects using textures with an alpha channel (transparency), such as leaves on trees.

When a ray hits a fully transparent part of such a surface, it should not produce a hit result - the ray should pass through directly. However, in current games, to determine this accurately, it is necessary to process multiple other shaders. Nvidia's new opacity micromap engine breaks down these surfaces into more triangles and then determines what exactly happened, reducing the number of ray shaders required.

Two further increases in Ada's ray tracing capabilities are the reduction in BVH construction time and memory occupancy (respectively claimed to be 10 times faster and 20 times smaller), as well as the structure for reordering threads for ray shaders, providing better efficiency. However, the former does not require developers to change the software, and the latter is currently only accessible through Nvidia's API, so it does not benefit current DirectX 12 games.

When we tested the ray tracing performance of the GeForce RTX 4090, the average frame rate drop after enabling ray tracing was slightly less than 45%. With the Ampere-driven GeForce RTX 3090 Ti, the drop was 56%. However, this improvement cannot be entirely attributed to the improvement of the RT core, as the 4090's shading throughput and cache are much larger than the previous models.

We have not yet seen what the ray tracing improvements of RDNA 3 are, but it is worth noting that no GPU manufacturer expects RT to be used in isolation - that is, upgrades are still needed to achieve high frame rates.

Fans of ray tracing may be a bit disappointed, as the new round of graphics processors has not made any significant progress in this area, but there has been a lot of progress since Nvidia's Turing architecture first appeared in 2018.Memory: Driving the Data Superhighway

The way a GPU processes data differs from other chips, making it crucial for the ALU to receive data efficiently. In the early days of PC graphics processors, there was almost no cache inside, and the global memory (the RAM used by the entire chip) was very slow DRAM. Even just 10 years ago, the situation was not much better.

So, let's delve into the current situation, starting with AMD's memory hierarchy in its new architecture. Since the first iteration, RDNA has used a complex multi-level memory hierarchy. The most significant change occurred last year when a large amount of L3 cache was added to the GPU, up to 128MB in some models.

The situation for the third round is still the same, but with some subtle changes.

The register file has now increased by 50% (they had to do this to cope with the increase in ALUs), and the first three levels of cache have all grown larger. The sizes of L0 and L1 have doubled, with L2 cache reaching up to 2MB, totaling 6MB in Navi 31.

The L3 cache has actually been reduced to 96MB, but there is a good reason for this—it is no longer located within the GPU chip. We will discuss this aspect in more detail in the latter part of this article.

Due to the wider bus widths between different cache levels, the overall internal bandwidth is also higher. Clock by clock, there is an additional 50% between L0 and L1, and the same increase between L1 and L2. But the biggest improvement is between L2 and the external L3—it is now a total of 2.25 times wider.

The L2 to L3 peak bandwidth of Navi 21 used in the Radeon RX 6900 XT is 2.3 TB/s; thanks to AMD's Infinity Fanout interconnect, Navi 31 in the Radeon RX 7900 XT increases it to 5.3 TB/s.Separating the L3 cache from the main chip does indeed increase latency, but this is offset by the Infinity Fabric system using a higher clock—overall, the L3 latency is reduced by 10% compared to RDNA 2.

RDNA 3 is still designed to use GDDR6, not the slightly faster GDDR6X, but the high-end Navi 31 chip has two additional memory controllers, increasing the global memory bus width to 384 bits.

AMD's cache system is certainly more complex than those of Intel and Nvidia, but Chips and Cheese's micro-benchmark tests on RDNA 2 show that it is a very efficient system. Latency is low, and it provides the background support needed for the CU to achieve high utilization rates, so we can expect the same for the system used in RDNA 3.

Intel's memory hierarchy is slightly simpler, mainly a two-level system (ignoring smaller caches, such as the constant cache). There is no L0 data cache, only 192kB of L1 data and shared memory.

Like Nvidia, this cache can be dynamically allocated, with up to 128kB available as shared memory. In addition, there is a separate 64kB texture cache (not shown in the diagram).

For a chip designed for mid-range market graphics cards (the DG2-512 used in the A770), the L2 cache is very large, with a total of 16MB. The data width is also appropriately large, with a total of 2048 bytes per clock between L1 and L2. This cache contains eight partitions, each serving a 32-bit GDDR6 memory controller.

However, analysis suggests that despite the abundant cache and available bandwidth, the Alchemist architecture is not particularly good at fully utilizing them, and it requires workloads with a high number of threads to mask its relatively poor latency.

Nvidia retains the same memory structure as Ampere, with each SM having a 128kB cache that serves as L1 data storage, shared memory, and texture cache. The amount available for different roles is dynamically allocated. There is currently no news about any changes to L1 bandwidth, but in Ampere, it was 128 bytes per clock per SM. Nvidia has never explicitly stated whether this figure is cumulative, combined for read and write, or only for one direction.

If Ada is at least the same as Ampere, then the total L1 bandwidth for all SMs is a huge bandwidth of 18 kB per clock—far greater than RDNA 2 and Alchemist.It must be emphasized once again that these chips cannot be directly compared, as Intel's pricing and marketing are positioned as mid-range products, while AMD has clearly stated that Navi 31 was never designed to compete with Nvidia's AD102. Its competitor is AD103, which is much smaller than AD102.

The most significant change in the memory hierarchy is that the second-level cache expands to 96MB in a complete AD102 die—16 times that of its predecessor, GA102. Like Intel's system, L2 is partitioned and paired with a 32-bit GDDR6X memory controller to achieve a DRAM bus width of up to 384 bits.

Larger cache sizes generally have longer latencies compared to smaller caches, but due to the increase in clock speed and some improvements in the bus, Ada Lovelace shows better cache performance than Ampere.

If we compare these three systems, Intel and Nvidia adopt the same approach to L1 cache—it can be used as a read-only data cache or as a compute shared memory. In the latter case, the GPU needs to be explicitly instructed by software to use it in this format, and the data is retained only when the threads using it are active. This adds complexity to the system, but it provides a useful boost to computational performance.

In RDNA 3, the "L1" data cache and shared memory are divided into two 32kB L0 vector caches and a 128kB local data share. The L1 cache that AMD refers to is actually a set of four shared stepping stones between the DCU and L2 cache for read-only data.

Although the cache bandwidth is not as high as Nvidia's, the multi-layer approach helps to address this issue, especially when the DCU is not fully utilized.

High-end cards have a lot of DRAM, but the speed is still relatively slow.

The huge processor-level cache system is generally not the best choice for GPUs, which is why we have not seen more than 4 or 6MB in previous architectures. However, AMD, Intel, and Nvidia all have a large amount of cache in their GPUs. The last layer is to cope with the relatively slow growth of DRAM speed.Adding a large number of memory controllers to a GPU can provide ample bandwidth, but at the cost of increased chip size and manufacturing overhead, and the use of alternatives such as HBM3 is much more expensive.

We have yet to see how AMD's system will perform in the end, but their four-layer approach in RDNA 2 has performed well compared to Ampere, and is much better than Intel's. However, as Ada packages more L2, the competition is no longer so simple.

Chip packaging and process nodes: Different ways to build power plants

AMD, Intel, and Nvidia all have one thing in common - they all use TSMC to manufacture their GPUs.

AMD used two different nodes for the GCD and MCD in Navi 31, with the former made on the N5 node and the latter made on the N6 (an enhanced version of N7). Intel also uses N6 in all of its Alchemist chips. For Ampere, Nvidia used Samsung's old 8nm process, but for Ada, they switched back to TSMC and its N4 process, which is a variant of N5.

N4 has the highest transistor density of all nodes and the best performance-to-power ratio, but when AMD launched RDNA 3, they emphasized that only the density of the logic circuit has increased significantly.

SRAM (for high-speed cache) and analog systems (for memory, system, and other signal circuits) have relatively smaller scaling. Coupled with the increase in wafer price for new process nodes, AMD decided to use the slightly older and cheaper N6 to manufacture MCD, as these small chips are mainly SRAM and I/O.

In terms of die size, the GCD is 42% smaller than Navi 21, at 300 mm2. Each MCD is only 37 mm2, so the combined die area of Navi 31 is roughly the same as its predecessor. AMD only announced the total number of transistors for all the chips, but this new GPU has 58 billion, making it their "largest" consumer graphics processor ever.

To connect each MCD to the GCD, AMD uses what they call high-performance fan-out - dense wiring that takes up very little space. Infinity Links - AMD's proprietary interconnect and signal system - operate at speeds up to 9.2Gb/s, with a link width of 384 bits for each MCD, and a bandwidth of 883GB/s (bidirectional) from MCD to GCD.For a single MCD, this is equivalent to the global memory bandwidth of a high-end graphics card. Navi 31 has all six, with a total bandwidth from L2 to MCD reaching 5.3TB/s.

Compared to traditional monolithic chips, using complex fan-out means that the cost of bare-die packaging will be higher, but the process is scalable—different SKUs can use the same GCD but with different numbers of MCDs. Smaller individual small-chip chips should improve wafer yield, but there is no indication whether AMD has included any redundancy in the design of the MCD.

If not, it means that any small chip with defects in SRAM will prevent the use of that part of the memory array, and they will have to box or not use it at all for low-end model SKUs.

So far, AMD has only released two RDNA 3 graphics cards (Radeon RX 7900 XT and XTX), but in both models, there are 16MB of cache for each MCD. If the next round of Radeon cards adopts a 256-bit memory bus and 64MB of L3 cache, they will also need to use "perfect" 16MB chips.

However, due to their very small area, a single 300mm wafer may produce more than 1500 MCDs. Even if 50% of them have to be scrapped, this is still enough to provide 125 Navi 31 packages.

We still need some time to determine the actual cost-effectiveness of AMD's design, but the company is fully committed to using this method now and in the future, although it is limited to larger GPUs. Budget RDNA 3 models will have much less cache and will continue to use monolithic manufacturing methods because it is more cost-effective to manufacture them in this way.

Intel's ACM-G10 processor is 406mm2, with a total of 2.17 billion transistors, and is between AMD's Navi 21 and Nvidia's GA104 in terms of the number of components and chip area.

This actually makes it a relatively large processor, which is why Intel's choice of the GPU market segment seems a bit strange. The Arc A770 graphics card uses the complete ACM-G10 chip, competing with similar products such as Nvidia's GeForce RTX 3060, which uses a chip size and the number of transistors only half of Intel's.So why is it so large? There are two possible reasons: the 16MB L2 cache and the large number of matrix units in each XEC. The decision to have the former is logical, as it alleviates the pressure on global memory bandwidth, but the latter could easily be considered excessive for the segment it is being sold to. The RTX 3060 has 112 Tensor cores, while the A770 has 512 XMX units.

Another odd choice by Intel is the use of TSMC N6 to manufacture the Alchemist die, instead of their own facilities. The official statement on this matter cites factors such as cost, wafer fab capacity, and chip operating frequency.

This suggests that Intel's equivalent production facilities (using the renamed Intel 7 node) are unable to meet the expected demand, with its Alder and Raptor Lake CPUs taking up most of the capacity.

They will compare the relative decline in CPU output and how this will affect revenue with the gains they will get from using Alchemist for their new GPU.

Where AMD has leveraged its multi-chip expertise and developed new technologies to create the large RDNA 3 GPU, Nvidia has stuck with a monolithic design for the Ada series. The GPU company has extensive experience in manufacturing ultra-large processors, although the 608mm² AD102 is not the largest chip it has released in terms of physical size (that honor goes to the 826mm² GA100).

However, Nvidia has 76.3 billion transistors, and its component count is far ahead of any consumer GPU seen so far.

In comparison, the GA102 used for the GeForce RTX 3080 and above seems quite light, with only 26.8 billion. The number of SMs has increased by 71%, and the L2 cache has increased by 1500%.

Large and complex chips like this are always difficult to achieve perfect wafer yield, which is why previous high-end Nvidia GPUs have spawned a large number of SKUs. Typically, with the release of a new architecture, their professional graphics card series (such as A series, Tesla, etc.) would be released first.When Ampere was released, the GA102 was featured in two consumer cards at launch and eventually found its way into 14 different products. So far, Nvidia has only used the AD102 in two products: the GeForce RTX 4090 and the RTX 6000. However, the latter has been unavailable for purchase since its launch last September.

The RTX 4090 utilizes the die with the best end of the merging process, disabling 16 SMs and 24MB of L2 cache, while the RTX 6000 only disables two SMs. This raises the question: where are the rest of the dies?

But since no other products are using the AD102, we can only assume that Nvidia is stockpiling them, although the purpose for other products is still unclear.

Two months after the architecture's launch, only two cards are still using it.

The GeForce RTX 4080 uses the AD103, which has 379mm² and 45.9 billion transistors, completely unlike its big brother—having a smaller die (80 SMs, 64MB L2 cache) should result in better yield, but there is still only one product using it.

They also released another RTX 4080, one that uses a smaller AD104, but due to the overwhelming criticism, they canceled it at launch. It is expected that this GPU will now be used to launch the RTX 4070 series.

Nvidia obviously has a large number of GPUs based on the Ada architecture, but it seems reluctant to ship them as well. One reason might be that they are waiting for the Ampere-powered graphics cards to clear the shelves. Another is that it dominates the general user and workstation market and may feel that it doesn't need to offer anything else right now.

But given the significant improvement in raw computing power provided by the AD102 and 103, the scarcity of Ada professional cards is a bit puzzling—the industry is always eager for more processing power.

Superstar DJ: Display and Media EngineWhen discussing the media and display engines of GPUs, they typically adopt a background marketing approach compared to aspects such as DirectX 12 capabilities or the number of transistors. However, with the game streaming industry generating billions of dollars in revenue, we are beginning to see more efforts to develop and promote new display features.

For RDNA 3, AMD has updated many components, most notably support for DisplayPort 2.1 (as well as HDMI 2.1a). Given that VESA, the organization overseeing the DisplayPort specification, only released version 2.1 a few months ago, it is an unusual move for GPU vendors to adopt the system so quickly.

The fastest DP transmission mode supported by the new display engine is UHBR13.5, with a maximum transmission rate of up to 54 Gbps for 4 channels. This is sufficient for 4K resolution, 144Hz refresh rate, without any compression, and standard timing.

Using DSC (Display Stream Compression), DP2.1 connections allow for up to 4K@480Hz or 8K@165Hz—significantly improved compared to DP1.4a used in RDNA 2.

Intel's Alchemist architecture features a display engine with DP 2.0 (UHBR10, 40 Gbps) and HDMI 2.1 outputs, but not all Arc series graphics cards using this chip can utilize the maximum functionality.

Although the ACM-G10 is not aimed at high-resolution gaming, using the latest display connection specifications means that esports monitors (such as 1080p, 360Hz) can be used without any compression. The chip may not be able to deliver such high frame rates in these types of games, but at least the display engine can.

As refresh rates climb, faster display connections are needed.

AMD and Intel's support for fast transmission modes in DP and HDMI is the kind of thing you would expect from a brand-new architecture, so Nvidia's choice not to do so with Ada Lovelace is a bit incongruous.All transistors of AD102 (almost the same as when combined with Navi 31 and ACM-G10) only have a display engine with DP1.4a and HDMI 2.1 output. For DSC, the former is already good enough for 4K@144Hz, but it is obviously a missed opportunity when competitors support it without compression.

The media engine in the GPU is responsible for the encoding and decoding of video streams, and all three vendors have a rich feature set in their latest architectures.

In RDNA 3, AMD has added full synchronous encoding/decoding for the AV1 format (only decoding in the previous RDNA 2). There is not much information about the new media engine, except that it can handle two H.264/H.265 streams simultaneously, with a maximum rate of 8K@60Hz for AV1. AMD also briefly mentioned "AI-enhanced" video decoding, but did not provide more details.

Intel's ACM-G10 has a similar range of capabilities, available for encoding/decoding of AV1, H.264, and H.265, but like RDNA 3, details are very scarce. Some early tests of the first Alchemist chips in Arc desktop graphics cards suggest that the media engine is at least as good as the media engines provided by AMD and Nvidia in their previous architectures.

Ada Lovelace follows AV1 encoding and decoding, and Nvidia claims that the new system's encoding efficiency is 40% higher than H.264 - on the surface, video quality is improved by 40% when using the new format.

High-end GeForce RTX 40 series graphics cards will be equipped with GPUs with two NVENC encoders, allowing you to choose to encode 8K HDR at 60Hz or improve video export parallelization, with each encoder handling half a frame at the same time.

With more information about the system, a better comparison can be made, but since the media engine is still seen as having a poor relationship with the rendering and computing engines, we will have to wait until each supplier puts their latest architecture cards on the shelf, and we further review the issue before we can make a better comparison.

What is the next step for GPUs?

There are already three suppliers in the desktop GPU market, and it is clear that each has its own approach to graphics processor design, although Intel and Nvidia have similar ways of thinking.For them, Ada and Alchemist are somewhat like a jack of all trades, applicable to various gaming, scientific, media, and data workloads. The ACM-G10's high emphasis on matrix and tensor computations and its reluctance to completely redesign its GPU layout indicate that Intel leans more towards science and data rather than gaming, but considering the potential growth in these fields, this is understandable.

For the last three architectures, Nvidia focuses on improving what is already good and reducing various bottlenecks in the overall design, such as internal bandwidth and latency. However, while Ada is a natural improvement on Ampere, which is a theme Nvidia has followed for many years, AD102 stands out as an evolutionary oddity when you look at the absolute scale of the number of transistors.

Compared to GA102, the difference is very significant, but this huge leap raises many questions. The first question is, for Nvidia, would AD103 be a better choice for their highest-end consumer product than AD102?

As used in the RTX 4080, AD103's performance has improved considerably compared to the RTX 3090, and like its big brother, the 64MB of L2 cache helps offset the relatively narrow 256-bit global memory bus width.

At 379mm², it's smaller than the GA104 used in the GeForce RTX 3070, so it's much more profitable to manufacture than AD102. It also has the same number of small cores as the GA102 chip, which ultimately found its place in 15 different products.

Another question worth asking is where Nvidia will go in terms of architecture and manufacturing? Can they achieve a similar level of scaling while still adhering to a single-chip design?

AMD's choice for RDNA 3 highlights a potential path for competition. By moving the worst-scaled (in the new process node) die part to a separate small chip, AMD has been able to successfully continue the large manufacturing and design leap between RDNA and RDNA 2.

Although not as large as AD102, Navi 31 still has 58 billion transistors of silicon—more than double that of Navi 21 and more than five times that of the original RDNA GPU Navi 10 (although that was not intended to be a halo product).The achievements of AMD and Nvidia are not accomplished in isolation. The substantial increase in the number of GPU transistors is only possible due to the fierce competition between TSMC and Samsung to become the primary manufacturers of semiconductor equipment.

Both are committed to increasing the transistor density of logic circuits while continuing to reduce power consumption. Samsung began mass production of its 3nm process earlier this year. TSMC has been doing the same thing and has a clear roadmap for current node improvements and their next major process.

It is currently unclear whether Nvidia will copy AMD's design manual and adopt the Chiplet layout in the successor to Ada, but the next 14 to 16 months may be decisive. If RDNA 3 proves to be a financial success, both in terms of revenue and total shipments, then Nvidia is very likely to follow suit.

However, the first chip to use the Ampere architecture is GA100—a data center GPU with a size of 829mm2 and 54.2 billion transistors. It is manufactured by TSMC, using their N7 node (the same as RDNA and most of the RDNA 2 series). Using N4 to manufacture AD102 allows Nvidia to design a GPU with a transistor density that is almost double that of its predecessor.

GPUs remain one of the most outstanding engineering technologies in desktop PCs!

So, is it possible to achieve this with N2 in the next architecture? It is possible, but the significant increase in cache (with poor scalability) indicates that even if TSMC achieves some impressive numbers in its future nodes, controlling the size of the GPU will become increasingly difficult. Intel is already using small chips, but only in its massive Ponte Vecchio data center GPU. Composed of 47 different tiles, some are from TSMC, and some are manufactured by Intel itself, with high parameters.

For example, the complete dual GPU configuration has more than 100 billion transistors, making AMD's Navi 31 look slim. Of course, it is not suitable for any type of desktop PC and is strictly not just a "GPU"—it is a data center processor that emphasizes matrix and tensor workloads.

Before turning to "Xe Next," its Xe-HPG architecture aims to undergo at least two more revisions (Battlemage and Celestial), and we are likely to see the use of tiling technology in Intel's consumer graphics cards.However, for the time being, we will let Ada and Alchemist use traditional monolithic chips for at least one to two years, while AMD will mix chiplet systems for mid-range to high-end cards and for their budget single-chip SKUs.

However, by the end of this century, we may see almost all types of graphics processors built from a series of different tiles/chips, all manufactured using various process nodes. The GPU remains one of the most remarkable engineering feats in desktop PCs—the growth in the number of transistors shows no signs of slowing down, and the computing power of today's ordinary graphics cards could only be dreamed of about 10 years ago.

Kick off the next three-way architectural battle!

Post Comment